Dr Amr Ahmed, HoS of Computer Science (UNM), was invited as “Keynote Speaker” and “Guest of Honour” to the “International Conference on Advanced Computing Techniques and Applications (ICACTA 2020)” on 5th and 6th March 2020. The conference was held in Bangalore, India, and was organised and hosted by Reva University. Amr first delivered a short inaugural speech and then a full keynote speech, which included an overview of research in the School of Computer Science (UNM). During the inaugural session, Amr was presented with the “Maharaja” hat and robe shown in the photo, which are traditional in Bangalore, India. Amr had various discussions with the organisers and other guests, who expressed interest in research collaboration.


Plenary Talk: International Conference for Crops Improvement 2019

Invited to the ICCI2019 (International Conference for Crops Improvement 2019), at UPM (Universiti Putra Malaysia), Dr Amr Ahmed (HoS of Computer Science, UNM) delivered a plenary talk titled “Machine Vision & AI; from Health to AgriFood”. The main theme of the conference this year was “Sustainability through Data-Driven and Frontier Research”, which is closely linked to our research within Computer Science in Nottingham Malaysia.


New paper accepted in ICPR 2014 – “Compact Signature-based Compressed Video Matching Using Dominant Colour Profiles (DCP)”

The paper “Compact Signature-based Compressed Video Matching Using Dominant Colour Profiles (DCP)” has been accepted at the ICPR 2014 conference (http://www.icpr2014.org/) and will be presented in August 2014 in Stockholm, Sweden.

Abstract: This paper presents a technique for efficient and generic matching of compressed video shots, through compact signatures extracted directly without decompression. The compact signature is based on the Dominant Colour Profile (DCP); a sequence of dominant colours extracted and arranged as a sequence of spikes, in analogy to the human retinal representation of a scene. The proposed signature represents a given video shot with ~490 integer values, facilitating real-time processing to retrieve a maximum set of matching videos. The technique is able to work directly on MPEG compressed videos, without full decompression, as it utilises the DC-image as a base for extracting colour features. The DC-image has a highly reduced size, while retaining most visual aspects, and provides high performance compared to the full I-frame. The experiments and results on various standard datasets show the promising performance of the proposed technique, in terms of both accuracy and computational complexity.

Congratulations and well done to Saddam.

Analysis and experimental results on using the DC-image, and comparisons with the full image (I-frame), can be found in “Video matching using DC-image and local features” (http://eprints.lincoln.ac.uk/12680/).
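For readers unfamiliar with the DC-image, here is a rough sketch of the idea, not the method from the paper. In MPEG, the DC coefficient of each 8x8 block is proportional to that block's average intensity, so a DC-image can be approximated by averaging 8x8 tiles of a frame. The paper reads these values directly from the compressed stream; this sketch assumes an already decoded greyscale frame stored as a list of lists. The function names `dc_image` and `dominant_bin`, and the histogram-based "dominant" value, are hypothetical illustrations only.

```python
def dc_image(frame, block=8):
    """Approximate a DC-image by averaging each block x block tile.

    Assumption: `frame` is a decoded greyscale image (list of rows of
    numbers). The paper instead takes DC coefficients straight from
    the MPEG stream, without decompression.
    """
    if not frame or not frame[0]:
        return []
    rows = len(frame) // block
    cols = len(frame[0]) // block
    result = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            # Sum all pixels in the tile, then divide by the tile area.
            total = sum(
                frame[y][x]
                for y in range(r * block, (r + 1) * block)
                for x in range(c * block, (c + 1) * block)
            )
            out_row.append(total / (block * block))
        result.append(out_row)
    return result


def dominant_bin(dc, bins=8, max_value=256):
    """Return the index of the most populated intensity bin.

    Hypothetical stand-in for a "dominant colour": a plain histogram
    over the DC-image values, not the paper's DCP signature.
    """
    counts = [0] * bins
    for row in dc:
        for value in row:
            index = min(int(value * bins / max_value), bins - 1)
            counts[index] += 1
    return counts.index(max(counts))


# A 16x16 frame made of four flat 8x8 tiles.
frame = [[10] * 8 + [20] * 8 for _ in range(8)] + \
        [[30] * 8 + [40] * 8 for _ in range(8)]
small = dc_image(frame)
print(small)
print(dominant_bin(small))
```

The 16x16 frame shrinks to a 2x2 DC-image, which gives a feel for why working on the DC-image is so much cheaper than working on the full I-frame.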

Best Student Paper Award 2013 – WCE 2013

Congratulations to Saddam Bekhet (PhD Researcher) who achieved the “Best Student Paper Award 2013” for his conference paper entitled “Video Matching Using DC-image and Local Features”, presented earlier in “World Congress on Engineering 2013” in London.

Abstract: This paper presents a suggested framework for video matching based on local features extracted from the DC-image of MPEG compressed videos, without decompression. The relevant arguments and supporting evidence are discussed for developing video similarity techniques that work directly on compressed videos, without decompression, and especially utilising small-size images. Two experiments are carried out to support the above. The first compares the DC-image and the I-frame, in terms of matching performance and the corresponding computational complexity. The second experiment compares local features and global features in video matching, especially in the compressed domain and with small-size images. The results confirmed that the use of the DC-image, despite its highly reduced size, is promising, as it produces at least similar (if not better) matching precision compared to the full I-frame. Also, using SIFT as a local feature outperforms the precision of most of the standard global features. On the other hand, its computational complexity is relatively higher, but it is still within the real-time margin. There are also various optimisations that can be made to improve this computational complexity.

Conference paper presented at WCE’13 – 3rd July 2013 – London

The paper (titled “Video Matching Using DC-image and Local Features”) was presented by Saddam Bekhet (PhD Researcher) at the International Conference of Signal and Image Engineering (ICSIE’13), during the World Congress on Engineering 2013, in London, UK.

Abstract:

This paper presents a suggested framework for video matching based on local features extracted from the DC-image of MPEG compressed videos, without decompression. The relevant arguments and supporting evidence are discussed for developing video similarity techniques that work directly on compressed videos, without decompression, and especially utilising small-size images. Two experiments are carried out to support the above. The first compares the DC-image and the I-frame, in terms of matching performance and the corresponding computational complexity. The second experiment compares local features and global features in video matching, especially in the compressed domain and with small-size images. The results confirmed that the use of the DC-image, despite its highly reduced size, is promising, as it produces at least similar (if not better) matching precision compared to the full I-frame. Also, using SIFT as a local feature outperforms the precision of most of the standard global features. On the other hand, its computational complexity is relatively higher, but it is still within the real-time margin. There are also various optimisations that can be made to improve this computational complexity.

Well done Saddam.

Conference paper Accepted to the “World Congress on Engineering”

New conference paper accepted for publication in “World Congress on Engineering 2013”.

The paper title is “Video Matching Using DC-image and Local Features”.

Abstract:

This paper presents a suggested framework for video matching based on local features extracted from the DC-image of MPEG compressed videos, without decompression. The relevant arguments and supporting evidence are discussed for developing video similarity techniques that work directly on compressed videos, without decompression, and especially utilising small-size images. Two experiments are carried out to support the above. The first compares the DC-image and the I-frame, in terms of matching performance and the corresponding computational complexity. The second experiment compares local features and global features in video matching, especially in the compressed domain and with small-size images. The results confirmed that the use of the DC-image, despite its highly reduced size, is promising, as it produces at least similar (if not better) matching precision compared to the full I-frame. Also, using SIFT as a local feature outperforms the precision of most of the standard global features. On the other hand, its computational complexity is relatively higher, but it is still within the real-time margin. There are also various optimisations that can be made to improve this computational complexity.

New Journal paper Accepted to the “Multimedia Tools and Applications”

New journal paper accepted for publication in the journal “Multimedia Tools and Applications”.

The paper title is “A Framework for Automatic Semantic Video Annotation utilising Similarity and Commonsense Knowledgebases”

Abstract:

The rapidly increasing quantity of publicly available videos has driven research into developing automatic tools for indexing, rating, searching and retrieval. Textual semantic representations, such as tagging, labelling and annotation, are often important factors in the process of indexing any video, because of their user-friendly way of representing the semantics appropriate for search and retrieval. Ideally, this annotation should be inspired by the human cognitive way of perceiving and of describing videos. The difference between the low-level visual contents and the corresponding human perception is referred to as the ‘semantic gap’. Tackling this gap is even harder in the case of unconstrained videos, mainly due to the lack of any previous information about the analyzed video on the one hand, and the huge amount of generic knowledge required on the other.

This paper introduces a framework for the Automatic Semantic Annotation of unconstrained videos. The proposed framework utilizes two non-domain-specific layers: low-level visual similarity matching, and an annotation analysis that employs commonsense knowledgebases. Commonsense ontology is created by incorporating multiple-structured semantic relationships. Experiments and black-box tests are carried out on standard video databases for action recognition and video information retrieval. White-box tests examine the performance of the individual intermediate layers of the framework, and the evaluation of the results and the statistical analysis show that integrating visual similarity matching with commonsense semantic relationships provides an effective approach to automated video annotation.

Well done and congratulations to Amjad Altadmri.

Visit to Xidian University

Amr was invited to visit Xidian University, by the Director of International Cooperation and Exchange.

Nice tour around the university and research labs.

Exchanged information on research interests and projects.

Discussion on potential collaboration.

Amr was invited for another visit (to be hosted by Xidian University) in the near future, for research presentation.

Nice present, and delicious dinner.

ICMA’10 Conference, Xi’an, China

Amr chaired the “Computer Vision” sessions at the ICMA’10 conference in China, at the invitation of the Conference and Program Chair.

Nice place to be, although really hot and humid!

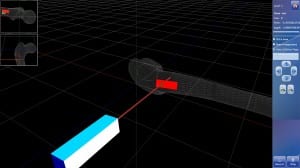

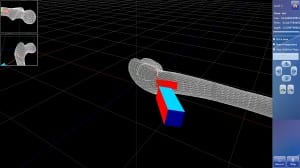

Mastering DHS

Mastering DHS is an initial prototype for virtual training of junior surgeons for the DHS (Dynamic Hip Screw) surgery.

It aims to improve hand-eye-brain coordination by providing a computerised training system, constructed from affordable Commercial Off-The-Shelf (COTS) components.

This work is in collaboration with Prof. Maqsood, Consultant Trauma and Orthopaedic Surgeon at the Lincoln Hospital.

Project in the media/press:

- http://www.ehi.co.uk/news/EHI/7643/lincs-surgeons-practice-on-wii-hip

- http://www.thisislincolnshire.co.uk/Nintendo-Wii-technology-helps-train-surgeons/story-15542080-detail/story.html

- http://www.ulh.nhs.uk/news_and_events/ulh_news/2012/ULH%20News%20Spring%202012.pdf (page 5)

Conference paper here: http://amrahmed.blogs.lincoln.ac.uk/2012/05/02/conference-paper-accepted-dhs-virtual-training/

For further details, please contact:

| Contact details | On the Web |
| --- | --- |
| Dr Amr Ahmed, School of Computer Science, University of Lincoln, Brayford Pool, Lincoln LN6 7TS, United Kingdom | On Academia: http://ulincoln.academia.edu/AmrAhmed |
| Email: aahmed@lincoln.ac.uk | On ResearcherID: http://www.researcherid.com/rid/A-4585-2009 |
| Tel.: +44 (0) 1522 837376, Fax.: +44 (0) 1522 886974 | My Web Pages: http://webpages.lincoln.ac.uk/AAhmed |